The Future of Data: Global, Distributed, Available

Oil or sunlight? The Economist introduced the idea of data as oil back in 2017, calling it “the fuel of the future,” and resurfaced the debate earlier this year when it challenged its earlier premise and got very philosophical about how steeped in the stuff our lives are.

But light or dark, the reality is that data is an essential part of every enterprise-level strategy today, and two of its characteristics must be well understood: its scale and its distribution. Together they shape what a business can and can’t do to run its operations and to serve its lifeblood: its customers’ needs.

We recently convened some experts to share key insights on what decision makers need to understand about data and what choices they need to be able to make.

Definition, Characteristics and Tips for Adopting a Globally Distributed Data System: The View from the Clouds

The first speaker at this event was noted analyst James Curtis, a Senior Researcher for the Data, AI & Analytics Channel at 451 Research (now part of S&P Global Market Intelligence). With over 20 years’ experience in the IT and technology industry, James is the go-to expert on data platform technology and strategy, and he shared insights both foundational and futuristic.

He kicked off by defining the Globally Distributed Database and differentiating it from its predecessors: Databases and Distributed Databases.

He then shared a telling graphic on how global distribution is now an overwhelming reality: a figure of the Public Cloud Locations Open or Announced by AWS, Azure, Google, IBM and Alibaba.

He moved on to share more of 451/S&P’s research findings, which showed the expected growth in both the median and the mean amount of data generated, now and two years into the future. Diving deeper into the details, he shared findings about where data is currently stored versus where it will be stored in the future (spoiler: the major move is from on-premises to public cloud).

He summarized this section of the talk with a look at Data Processing Across the Spectrum, that is, how companies gather and use data in their Core versus at the Edge and Near-Edge. This is an interesting look at how large companies view where data “lands” versus where it is “used,” i.e. analyzed. (If you watch the webinar, this is discussed in more detail at minute 12.)

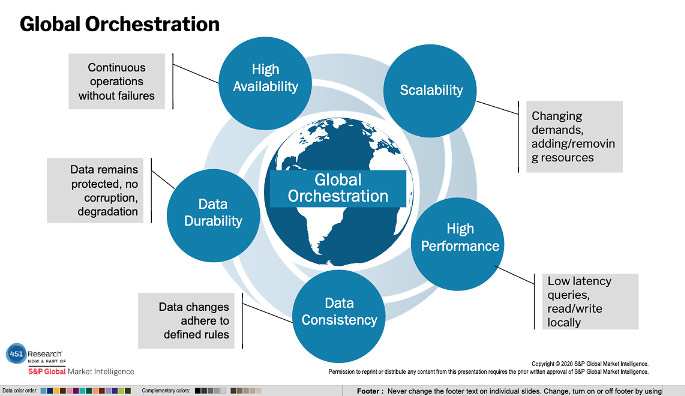

James went on to share detailed observations on the key characteristics that data management professionals need to understand deeply, and to have a good answer for, as choices are made in their organization. Below you can see a diagram that lists the characteristics discussed, with the end result being a global data hub orchestrating the edge-to-core systems: an optimized architecture for the always-on digital world.

Figure 1: The characteristics required to both protect and fully utilize an organization’s global data resources.

James summarized this with his Tips for Adopting Globally Distributed Databases.

Think globally, act (process) locally

Develop for the cloud, not a cloud vendor

Prioritize the data, technology will follow

Moving from the observational and theoretical to the practical, Aerospike’s Chief Product Officer & Founder, Srini Srinivasan, then shared what is possible, both in general and in three inspiring use cases.

Forces driving Geo-Distributed Continental and Intercontinental Transactions

For more than a decade, Srini Srinivasan has been a visionary in what is possible and where data platforms are heading. When it comes to databases, Srini is one of the recognized pioneers of Silicon Valley, with over a dozen patents in database, web, mobile and distributed systems technologies. Srini co-founded Aerospike to solve the scaling problems he experienced with Oracle databases while he was Senior Director of Engineering at Yahoo.

To kick off his section of the webinar, he led with a simple maxim: modern applications demand that their database infrastructure be always available (no planned or unplanned downtime) and always right (no data inconsistencies, such as lost data or conflicting writes).

A data platform that can satisfy those two foundational requirements will do so by focusing on:

Accuracy (Strong consistency)

Speed (faster than SQL-based databases; as fast as or faster than legacy NoSQL)

Scalability (going beyond TB, to PB and beyond)

Efficiency (low TCO in both money and people’s time; the lowest hardware requirements, to minimize OpEx and CapEx)

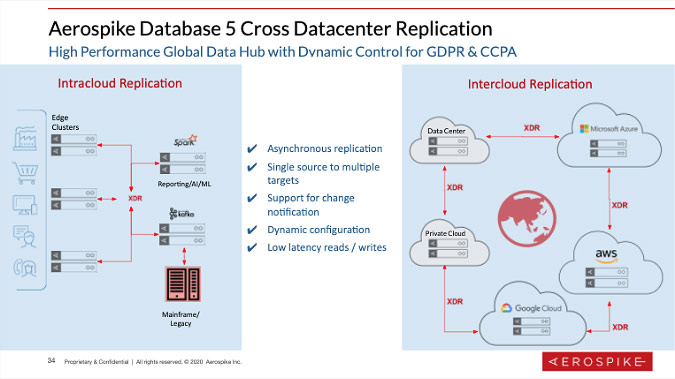

But this part of the talk did not stay theoretical: from these requirements, Srini moved into the specifics of the solution Aerospike has architected, including very recent announcements focused on global cross datacenter replication and support for cloud migration.

Figure 2: Aerospike’s unique capability to provide high-performance intracloud and intercloud Cross Datacenter Replication.

After several diagrams introducing the platform, Srini concluded his observations with inspiring success stories from customers who have been able to take advantage of these groundbreaking capabilities.

He shared how a major European bank provides an instant payment service that enables individuals and firms in various European locations to transfer money to each other within seconds, regardless of the time of day.

The instant payment application requires a highly resilient, geographically distributed database platform with strong consistency and reasonable runtime performance to meet its target service level agreements (SLAs). Other solutions didn’t meet the bank’s objectives for resiliency (100% uptime), consistency (no data loss and no dirty or stale reads), and low transaction cost.

Next came an overview of how Cross Datacenter Replication is deployed at Nielsen to provide low-latency, reliable replication across global data locations. Nielsen stores ad tech device information and event history for billions of users in real time, and in this case low-latency transactions on user objects are paramount to keeping its competitive edge. Nielsen conducts real-time modeling and analysis, returning the information to the user in milliseconds.

Finally came a look at how the Aerospike data platform is helping financial services firms prevent fraud. Aerospike enables the LexisNexis | ThreatMetrix organization to handle over 130 million transactions a day to make real-time customer trust decisions, virtually eliminating false positives and greatly enhancing fraud detection. The low latency of this vast system enables:

Seamlessly identifying trusted/returning customers (matching user-to-device)

Preventing account takeover

Isolating fraudulent transactions

Creating global digital IDs, with 80 million updates per day

Conclusion and Questions

The session was moderated by Stephen Faig, Research Director of Unisphere Research at DBTA, and we were able to take some very interesting questions from attendees, including these:

To James: Regarding public cloud capabilities and locations and data growth as drivers for globally distributed systems, can you comment on the applications and industry needs and what use cases you’re seeing? (Short answer: moving money, moving goods and the like.)

To Srini: Can you elaborate on the significance of data being strongly consistent and distributed at internet scale? Is this a typical capability for vendors? (Short answer: The combination of strong consistency, high performance and distribution is what is required; *most* vendors can deliver at most two of the three, while Aerospike has worked tirelessly over the last decade to provide all three.)

You can watch the webinar on demand.