Simplicity – the Secret to Scaling

“That’s been one of my mantras — focus and simplicity. Simple can be harder than complex: You have to work hard to get your thinking clean to make it simple. But it’s worth it in the end because once you get there, you can move mountains.” – Steve Jobs

Aerospike co-founder Srini’s experience scaling Yahoo! Mobile to serve hundreds of millions of users around the world, together with his desire to sleep through the night, created a singular focus on simplicity when he designed a database system that would manage itself. Anything that could be automated, he automated. Human intervention was required only for monitoring capacity, adding capacity, upgrading servers, or troubleshooting the system.

“Simplicity is the ultimate sophistication.” ― Leonardo da Vinci

Meanwhile, Aerospike co-founder Brian’s experience building high-performance systems led him to build a database that was no longer the bottleneck. He built a log-structured file system to optimize access to flash/SSD drives, and implemented simple, contention-free DRAM indexes. This database and storage system is almost as fast as DRAM and delivers consistent, reliable performance, with much lower cost and much higher storage capacity.

The combination of Srini’s self-managing architecture and Brian’s high-capacity flash storage architecture allows Aerospike to scale with significantly fewer servers than Memcache or Redis, and with far more simplicity.

Scaling with Simplicity: 4x Fewer Servers than Memcache or Redis for 1 TB at 50k TPS

A common use case we see has:

1TB of data with 500 million objects with 2 KB average size per object

50k Transactions Per Second (TPS)

Balance of 80% reads / 20% writes

Replication factor of two: one primary, one for failover

This use case requires 3 servers using SSDs vs 14 with DRAM. The 14 servers using DRAM could run with two 2.4+ GHz CPUs, 256 GB RAM and 2 TB of free hard disk for persistence of the data in RAM. By contrast, by using SSDs for storage, the 3 servers could be deployed with one 2.4+ GHz CPU, 48 GB RAM and 4 x 512 GB SSDs.
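The figures in this use case can be checked with a few lines of arithmetic. The following is a minimal sketch rather than Aerospike's sizing method: the `Fleet` class and its field names are made up for illustration, and decimal units (1 TB = 10^9 KB) are assumed. It only multiplies out the numbers quoted above and does not derive the server counts themselves.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Fleet:
    """One deployment option; class and field names are illustrative only."""
    servers: int
    ram_gb_per_server: int
    ssd_gb_per_server: int = 0

    def total_ram_gb(self) -> int:
        return self.servers * self.ram_gb_per_server

    def total_ssd_gb(self) -> int:
        return self.servers * self.ssd_gb_per_server


# Workload from the text, assuming decimal units (1 TB = 10**9 KB).
objects = 500_000_000
avg_object_kb = 2
replication_factor = 2
unique_tb = objects * avg_object_kb / 10**9   # unique data set size
stored_tb = unique_tb * replication_factor    # primary plus failover copy

# 50k TPS split 80% reads / 20% writes.
tps = 50_000
reads_per_sec = tps * 80 // 100
writes_per_sec = tps * 20 // 100

# Server configurations exactly as quoted in the text.
dram_fleet = Fleet(servers=14, ram_gb_per_server=256)
ssd_fleet = Fleet(servers=3, ram_gb_per_server=48, ssd_gb_per_server=4 * 512)

server_ratio = dram_fleet.servers / ssd_fleet.servers

print(f"unique data: {unique_tb} TB, stored with replication: {stored_tb} TB")
print(f"reads/s: {reads_per_sec}, writes/s: {writes_per_sec}")
print(f"DRAM fleet RAM: {dram_fleet.total_ram_gb()} GB")
print(f"SSD fleet flash: {ssd_fleet.total_ssd_gb()} GB, RAM: {ssd_fleet.total_ram_gb()} GB")
print(f"server ratio: {server_ratio:.2f}x")
```

Under these assumptions, 500 million objects at 2 KB each do come to 1 TB, and 14 servers versus 3 is a ratio of roughly 4.7, in line with the "4x fewer servers" headline.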

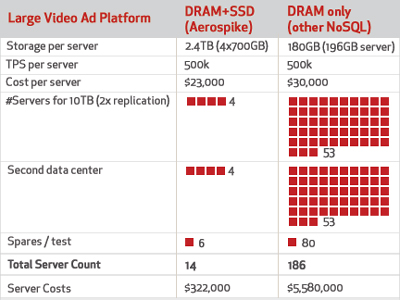

Scaling with Simplicity: 14x Fewer Servers than Memcache or Redis for 5 TB at 500k TPS

One of the largest online video advertising platforms recently made some calculations to manage:

5TB of unique data

500k TPS, even if 25% of machines failed

Balance of 80% reads / 20% writes

Replication factor of two: one primary, one for failover

2 data centers plus a test cluster

This customer deployed with 14 servers (4 x 2 data centers + 6 for the test cluster) using SSDs, versus the 186 (53 x 2 data centers + 80 for the test cluster) that would have been required with DRAM solutions. They used Micron P320h SLC SSDs and attached 4 x 700 GB drives for 2.4 TB of usable storage on each server, while the DRAM-only option would have used 196 GB of DRAM (180 GB usable) per server.
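The server tallies above follow directly from the per-data-center counts. As a small sketch, with a hypothetical helper named `total_servers`, the totals and the usable fraction of each server's storage can be reproduced like this:

```python
def total_servers(per_datacenter: int, datacenters: int, test_cluster: int) -> int:
    """Production servers across all data centers plus the test cluster."""
    return per_datacenter * datacenters + test_cluster


# Counts as quoted in the text.
ssd_servers = total_servers(per_datacenter=4, datacenters=2, test_cluster=6)
dram_servers = total_servers(per_datacenter=53, datacenters=2, test_cluster=80)
server_ratio = dram_servers / ssd_servers

# Per-server storage: raw versus usable, in GB.
ssd_raw_gb = 4 * 700
ssd_usable_gb = 2400
dram_raw_gb = 196
dram_usable_gb = 180

print(f"SSD servers: {ssd_servers}, DRAM servers: {dram_servers}")
print(f"server ratio: {server_ratio:.1f}x")
print(f"SSD usable fraction: {ssd_usable_gb / ssd_raw_gb:.2f}")
print(f"DRAM usable fraction: {dram_usable_gb / dram_raw_gb:.2f}")
```

The totals come out to 14 and 186 servers, a ratio of about 13.3. Each SSD server offers 2.4 TB usable out of 2.8 TB raw, compared with 180 GB usable per DRAM server.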

According to Brian, “The goal was to compare using the ‘sweet spot’ of DRAM vs. the ‘sweet spot’ of Flash. For DRAM right now, buying servers with about 196GB seemed the best choice in terms of total cost per byte. As you buy larger servers, the density is higher (you can buy 1T and 2T servers from Dell), but the cost per byte goes up. With the SSD system, I recommended a very high throughput solution: the Micron P320h SLC SSDs. This is $8/GB, but is nearly as fast as DRAM.”

Servers with DRAM + SSDs cost $23k each and servers with DRAM + HDD cost $30k each, for a total cost of $368k for the DRAM + SSD option versus $5.58M for DRAM + HDD!
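The cost comparison can be sanity-checked in a few lines. This sketch assumes the $23k and $30k prices are per server, which the DRAM total supports: 186 servers at $30k is exactly $5.58M. The $368k SSD total is used as given. At $23k per server it corresponds to 16 servers, so it may cover machines beyond the 14 counted earlier; that is an inference, not something the text states.

```python
# Per-server prices in USD, assumed to be per server (see the note above).
ssd_server_cost = 23_000
dram_server_cost = 30_000

# DRAM + HDD option: 186 servers, as counted in the text.
dram_servers = 186
dram_total = dram_servers * dram_server_cost

# DRAM + SSD option: total taken as stated rather than recomputed.
ssd_total_stated = 368_000
ssd_servers_implied = ssd_total_stated / ssd_server_cost

cost_ratio = dram_total / ssd_total_stated

print(f"DRAM + HDD total: ${dram_total:,}")
print(f"DRAM + SSD total (as stated): ${ssd_total_stated:,}")
print(f"servers implied by the SSD total: {ssd_servers_implied:.0f}")
print(f"cost ratio: {cost_ratio:.1f}x")
```

On the stated totals, the DRAM + HDD option costs roughly 15 times as much as the DRAM + SSD option.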